The challenges of containerized architectures

Containers are helping organisations to modernise their legacy applications and create new cloud-native applications that are both scalable and agile. Container engines, such as Docker, and orchestration frameworks, such as Kubernetes, provide a standard way to package applications – including code, runtime, and libraries – and run them consistently throughout the software development lifecycle, independently of the underlying infrastructure (on-premises, cloud, virtualised, hyperconverged…).

This standardisation of containers and their compactness make them a natural ingredient of cloud architectures. This is especially true since each container can be installed on a per-use basis according to business needs, and its ultra-fast start-up has little or no impact on execution. These characteristics are particularly well suited to the cloud, where billing is based on the memory allocated, the power used and the storage consumed. In addition, the portability of containers makes the transition from development to operation, from one cloud environment to another, more reliable, predictable and reproducible.

In short, with containers, modern architectures backed by cloud resources have found a new trajectory. The question is no longer whether container technology will be deployed, but when containers will be more widely used than virtualised infrastructures.

According to a recent Forbes article, container integration is growing faster than expected in the enterprise. A new report from Gartner confirms this: “By 2023, more than 70% of global enterprises will run multiple containerised applications in production, compared to less than 20% in 2019.”

This raises the question: why are companies going down the container path?

The containerisation community and software are evolving rapidly. However, the enterprise IT landscape is not changing at the same speed, presenting both technical and cultural challenges to the integration and effective use of cloud-native technology and approaches.

One of the reasons for this growth is the portability of containers – a definite plus in today’s hybrid cloud world. Containers allow developers to move applications more quickly and easily from one environment to another. For example, you may want to build an application in one public cloud, move it to another for testing, and then move it to your data centre for production.

Another recent event that has helped to increase the popularity of containers is the rapid rise of Kubernetes, the open source container orchestration solution.

Over the past few years, Kubernetes has rapidly replaced a number of proprietary orchestrators, becoming the platform of choice for containers.

Finally, containers and microservices-based architectures are key among the latest generation of approaches for modernising the organisation and the enterprise IS landscape. This approach replaces traditional monolithic application development with a modern development ecosystem built on cloud, API services, CI/CD pipelines and cloud-native intelligent storage.

But how do you start a successful container implementation in your company?

With the growing popularity of containers, every company should develop and adopt a container initiative.

Identify your strengths and develop technological expertise

Some departments may already be using Docker and/or Kubernetes without having shared this with the central IT team. Shadow IT was commonplace in the early years of the public cloud, and the same thing is happening now with containers in the enterprise.

If you can’t find anyone who is already operating containers, create a team to do so. Look for people in your company who believe in a container strategy and are willing to learn.

Once you have identified them, encourage them and provide resources. Start small, with a stand-alone environment that is not connected to the IS landscape, so that they can learn in a low-risk setting.

Analyse your current application landscape

Containers have not always been the ideal solution for every type of application. The very properties that made them attractive used to be limitations for more dynamic applications. By design, containers were not meant to be persistent (or stateful), which made them better suited to stateless scenarios such as web systems and DevOps test environments.

Containers were originally stateless, which suited their portable and flexible nature. As they became more popular, management solutions for both stateful and stateless containers emerged. Today, stateful workloads are commonplace in containers, and the question is no longer whether to use stateful containers, but when to use them.

Before implementing a container strategy, you need to have a good idea of your current applications and the type of environment they are best suited for. Decide whether you will create new applications or refactor existing ones. If you are refactoring old applications, look for those that are self-contained and do not require other applications to run. These are the best candidates for containerisation.

Impact of containers on organisations

Containers are of course only one technology, but one that sits at the heart of the DevOps approach.

Obviously, the goal is not to create a completely new organisation that embraces DevOps, Agile principles and containerisation, but rather to adopt these principles within the existing organisation. Most organisations are reluctant because the change is time-consuming and involves some risk, and there is a natural resistance to change. Existing processes and organisational structures (ITIL, for example) and other barriers need to be adapted to pave the way for containerisation.

One area that illustrates these impacts is container image management. Most organisations try to make either Development or Operations solely responsible for what is inside a container. Unfortunately, this approach is ineffective and counterproductive.

The container includes the application runtime plus the base operating system (operations) and the application itself (development). Both teams must share equal responsibility. This concept of shared responsibility does not really exist in siloed processes and organisations, where there is still a clear boundary between development and operations.

Because Docker images are composed of layers, operations can provide base images (operating system and runtime) and developers can consume these images and add their application as a layer on top. This allows both groups to work independently but share responsibility, the cornerstone of DevOps.
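The split between a base image and an application layer on top can be pictured with a small model. The sketch below is only an illustration in Python, not how Docker stores images: each layer is a plain dictionary of file paths, and the file names, contents and the `merged_view` helper are all hypothetical.

```python
from collections import ChainMap

# Hypothetical base image maintained by operations: OS files plus a runtime.
base_layer = {
    "/etc/os-release": "distro 1.0",
    "/usr/bin/python3": "runtime 3.x",
    "/etc/app.conf": "defaults",
}

# Hypothetical layer added by developers on top of the base image.
app_layer = {
    "/app/main.py": "print('hello')",
    "/etc/app.conf": "tuned for the application",
}


def merged_view(*layers):
    """Return the file view a container would see; later layers win."""
    # ChainMap looks keys up from left to right, so the topmost layer goes first.
    return ChainMap(*reversed(layers))


image = merged_view(base_layer, app_layer)
print(image["/etc/os-release"])  # comes from the base layer
print(image["/etc/app.conf"])    # overridden by the application layer
```

Operations can publish a new `base_layer` (for example with a patched runtime) without touching `app_layer`, and developers can rebuild on top of it. Each team owns its layer, and both own the result.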

This is one of the reasons why containers are described as a technology that enables DevOps, and even moves towards DevSecOps.

Evaluate your data storage

A very important point to consider when creating a container strategy is to understand where your data needs to be located. Eventually you will need a data storage solution or platform that handles both stateful and stateless environments.

Legacy storage infrastructures may not meet these demands.

Containers scale in seconds, which means you could be managing thousands of containers at any one time. The Kubernetes orchestrator handles this perfectly, but your storage needs to be smart enough to interact with it and adapt instantly.

Similarly, the value of containers lies in their ability to run in very different contexts, so the storage must be able to adapt and follow the operating conditions of your containers.

Container life cycle management

Containers have an even greater potential for sprawl than virtual machines. This complexity is often intensified by numerous layers of services and tooling. Container lifecycle management can be automated by linking it closely to continuous integration / continuous delivery processes. Combined with continuous configuration automation tools, these processes can automate infrastructure deployment and operational tasks.

Scheduling should organise the deployment of containers to the optimal hosts in a cluster, according to the requirements of the management layer.
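To make the idea of placing containers on the "optimal" host concrete, here is a minimal sketch of a filter-then-score scheduler in Python. It is a simplified illustration and not the algorithm used by Kubernetes or any other orchestrator; the `Host` class, the `schedule` function, the node names and the scoring rule (most free memory left) are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    free_cpu: float    # CPU cores still available
    free_mem_mb: int   # memory still available, in MiB


def schedule(cpu, mem_mb, hosts):
    """Pick a host for one container, or return None if none fits."""
    # Filter step: keep only hosts that can satisfy the request.
    candidates = [h for h in hosts if h.free_cpu >= cpu and h.free_mem_mb >= mem_mb]
    if not candidates:
        return None
    # Score step: prefer the host with the most free memory left afterwards.
    best = max(candidates, key=lambda h: h.free_mem_mb - mem_mb)
    # Reserve the resources so later placements see the updated capacity.
    best.free_cpu -= cpu
    best.free_mem_mb -= mem_mb
    return best.name


cluster = [
    Host("node-a", 2.0, 4096),
    Host("node-b", 4.0, 8192),
    Host("node-c", 0.5, 16384),
]
print(schedule(1.0, 2048, cluster))  # node-c lacks CPU, node-b has the most memory left
```

A real scheduler weighs many more requirements (affinity, taints, storage locality and so on), but the two steps of filtering and scoring convey the principle.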

Kubernetes has become the de facto standard for container scheduling and management, with a vibrant community and support from most major commercial vendors. Enterprises will need to decide on the right usage model for Kubernetes by carefully evaluating the trade-offs between CaaS (containers as a service) and PaaS (platform as a service), as well as between hybrid and cloud-native deployments.

Why is containerised networking different?

Firstly, a container environment may need to interact with several thousand containers and therefore cannot be handled effectively with traditional network policies.

Static address allocation does not scale to such volumes, and standard dynamic allocation (DHCP), which takes a few seconds or even minutes, cannot meet the responsiveness requirements of a constantly changing containerised environment.

Furthermore, in a containerised environment, nothing is static. The configuration is constantly changing as containers are initialised and then destroyed. As a result, access control lists are particularly difficult to manage.

All these challenges have solutions. Overlay networks, service discovery, identifiers and tags allow traffic to be routed through containerised networks without relying on a traditional IP network.
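As an illustration of discovery by tags rather than by fixed addresses, the following Python sketch looks up containers by label. The registry contents, the addresses and the `select` function are hypothetical; real platforms expose this through their own service discovery mechanisms.

```python
def select(containers, selector):
    """Return addresses of containers whose labels match every selector pair."""
    return sorted(
        c["address"]
        for c in containers
        if all(c["labels"].get(key) == value for key, value in selector.items())
    )


# Hypothetical registry, kept up to date as containers start and stop.
registry = [
    {"address": "10.0.1.12:8080", "labels": {"app": "web", "env": "prod"}},
    {"address": "10.0.2.7:8080", "labels": {"app": "web", "env": "test"}},
    {"address": "10.0.3.4:5432", "labels": {"app": "db", "env": "prod"}},
]

# Callers ask for "the production web service", never for a fixed address.
print(select(registry, {"app": "web", "env": "prod"}))
```

When a container is destroyed and replaced, only the registry entry changes. The selector used by callers, and any policy written against the same labels, stays the same.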

Understanding security issues with large-scale containerised applications

Security is a major concern for many organisations launching a container strategy. In a survey of 400 IT professionals, 34% noted that security was not properly addressed in their container strategies.

Containers by design promote application security by isolating their components and applying the principle of “least privilege”. But you’ll need to take additional steps to prevent “elevation of privilege” attacks on a larger scale.

Ensure that container security is a shared responsibility between your DevOps and security teams.

Protect containerised applications with disaster recovery and appropriate backups.

Wherever possible, configure container applications to run with an “unprivileged” access level.

To further protect sensitive data, keep it out of containers and opt for access via API.

Implement a real-time monitoring solution with advanced log analysis to better investigate attack attempts. Indeed, the potentially very short lifetime of containers can make it difficult to detect or analyse an attack.

Securing a container-based infrastructure must cover a spectrum that extends from securing data, to hardening clusters and hosts, to securing the runtime (monitoring activity and blocking behavioural anomalies) or signing images.

Given the dynamic nature of container environments – and the many container, host and network surfaces that are susceptible to attack – standard practices such as vulnerability scanning and attack surface hardening are often not sufficient to achieve complete protection.

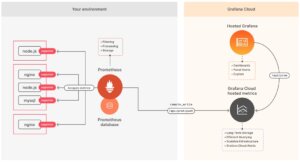

Monitoring and logging

Due to their ephemeral nature, containers are rather complex and more difficult to monitor than traditional applications running on virtual or bare metal servers. Yet monitoring is a critical step in ensuring optimal performance of containers running in different environments.

Container monitoring is not so different from monitoring traditional applications, because in both cases you need metrics, logs, service discovery and status checks. However, it is more complex because of the dynamic and multi-layered nature of containers.

Container performance monitoring should take into account basic metrics such as memory usage and CPU usage, as well as container-specific metrics such as CPU limit and memory limit, but also the infrastructure of containers and clusters.
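The relationship between usage and limits can be sketched as follows. This Python example assumes metrics have already been collected into dictionaries; the field names, the sample values and the 80% threshold are illustrative assumptions, not the output format of any particular monitoring tool.

```python
def utilisation(usage, limit):
    """Return usage as a fraction of the limit, or None when no limit is set."""
    if not limit:
        return None
    return usage / limit


def over_threshold(samples, threshold=0.8):
    """Names of containers whose CPU or memory use exceeds the threshold."""
    flagged = []
    for s in samples:
        ratios = [
            utilisation(s["cpu_cores"], s.get("cpu_limit")),
            utilisation(s["mem_mb"], s.get("mem_limit_mb")),
        ]
        # A missing limit gives None, which is skipped rather than treated as zero.
        if any(r is not None and r > threshold for r in ratios):
            flagged.append(s["name"])
    return flagged


# Hypothetical samples; "batch-1" has no CPU limit configured.
samples = [
    {"name": "web-1", "cpu_cores": 0.45, "cpu_limit": 0.5, "mem_mb": 200, "mem_limit_mb": 512},
    {"name": "web-2", "cpu_cores": 0.10, "cpu_limit": 0.5, "mem_mb": 100, "mem_limit_mb": 512},
    {"name": "batch-1", "cpu_cores": 1.50, "mem_mb": 900, "mem_limit_mb": 1024},
]
print(over_threshold(samples))
```

Raw usage alone would rank `batch-1` as the heaviest CPU consumer, yet `web-1` is the container closest to being throttled. This is why the limits need to be collected alongside the usage figures.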

In addition to the infrastructure layer, the application layer also has to be considered in the container management strategy. Application metrics cannot be collected from the container runtime, so this information has to be collected elsewhere and pushed to the monitoring system.

Fortunately, various solutions and approaches are being developed to address this issue, but beware of underestimating the amount of data generated by monitoring agents and the various logs. A common way to address this is to limit how long data is retained. Another approach is to reduce the granularity and precision of the metrics, for example through sampling. As a result, teams have less accurate information, less time to analyse problems, and limited visibility into one-off or recurring problems.
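The cost of coarser metrics is easy to show with a small example. The Python sketch below averages hypothetical CPU readings taken every 10 seconds into one point per minute; the values and the `downsample` helper are made up for illustration.

```python
from statistics import mean


def downsample(values, factor):
    """Average consecutive groups of `factor` samples into one point."""
    return [mean(values[i:i + factor]) for i in range(0, len(values), factor)]


# Hypothetical CPU usage (%) sampled every 10 seconds, with one short spike.
raw = [20, 22, 21, 95, 23, 20, 19, 21, 22, 20, 21, 20]

coarse = downsample(raw, 6)  # one averaged point per minute

print(max(raw))     # 95: the spike is visible at full resolution
print(max(coarse))  # 33.5: the same spike is flattened by averaging
```

Storage drops by a factor of six, but a 95% spike now looks like an unremarkable 33.5% minute, which is exactly the loss of visibility into one-off problems described above.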